The moment it clicked for me

After sitting through a grueling four-week risk governance audit for a Tier-1 logistics provider in late 2025, I realized we’d hit a tipping point. The system — a multi-agent routing model — flagged a specific transit corridor as “statistically optimized” despite localized labor strikes and infrastructure disruptions that were obvious to anyone actually watching the region. When I challenged the operations lead, his response was unsettling in its calm.

He didn’t open live feeds.

He didn’t call the regional manager.

He just pointed at the dashboard’s 94% confidence rating and shrugged.

“The model has more data than I do,” he said.

That’s the moment I stopped thinking of these tools as “support.” We’re not just using AI anymore. In too many workplaces, we’re surrendering judgment to it — because the system feels safer than the responsibility of being the human who disagreed.

I call this cognitive bankruptcy: you’re still operating, still producing, still shipping decisions — but the mental reserves that fund skepticism are depleted. Convenience wins. Curiosity loses.

Beyond the “co-pilot” myth: when tools become cognitive authorities

For years, the reassuring pitch was that AI would be a co-pilot. The machine handles the boring parts; humans do strategy and ethics. Nice story. The reality I see in 2026 is harsher: many organizations aren’t building co-pilots.

They’re building cognitive authorities.

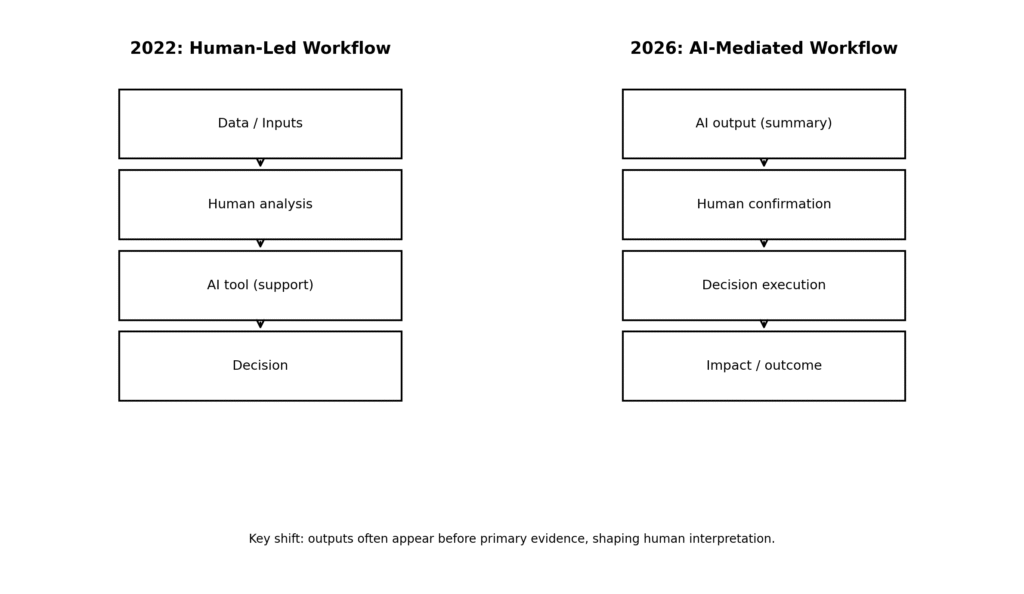

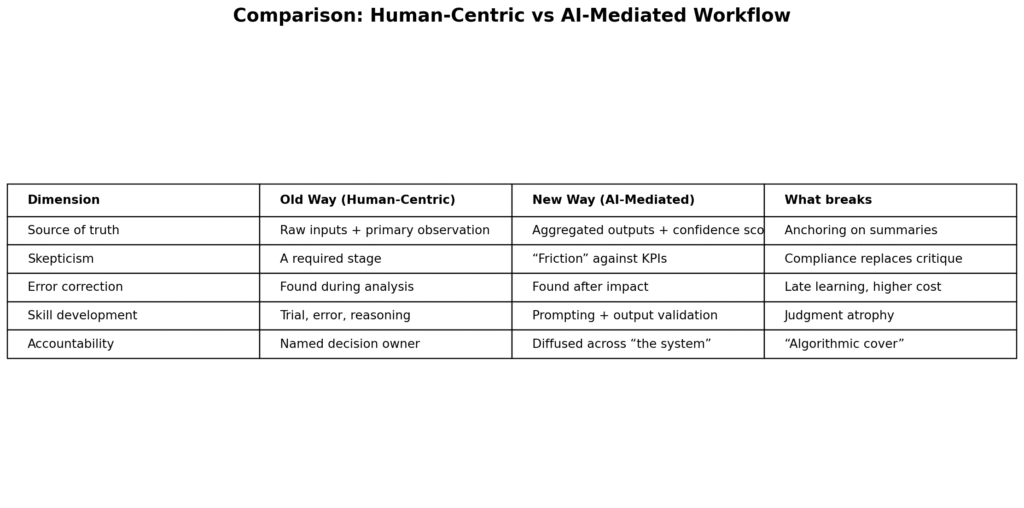

The shift is subtle but decisive: in modern workflows, the model’s output often arrives before the raw inputs. The ordering matters. Humans anchor early. Even disciplined professionals do. Once the AI summary hits the screen first, the “review” that follows often becomes a search for confirmation, not a hunt for truth.

This is not a vibes-based claim; the adoption data shows the scale. Stanford HAI’s 2025 AI Index found that 78% of organizations reported using AI in 2024, up from 55% the year before. At that scale, overreliance is an institutional risk, not a personal quirk.

https://hai.stanford.edu/ai-index/2025-ai-index-report

Where this shows up first

I see “AI-first” authority patterns most often in high-volume, high-pressure environments:

logistics routing and dispatch

claims triage and fraud scoring

compliance alert review

customer support escalation

recruiting screening stacks

These are settings where speed becomes a moral value. And speed quietly punishes skepticism.

This is also why governance and accountability matter so much. For a deeper read on oversight infrastructure, and why “someone will audit it later” is usually a fantasy, see the related AIChronicle piece: Who Audits the Algorithms?

https://theaichronicle.org/who-audits-the-algorithms/

The neuropsychology of the path of least resistance

AI fatigue isn’t just “too many tools.” It’s a human adaptation problem.

Questioning a model demands slow thinking. Effortful thinking. The kind you do when you’re solving a real problem, not when you’re clicking through a queue. Accepting the model’s output is easier. Faster. Socially safer. And when the workplace is built around velocity, the easy path becomes the rational path.

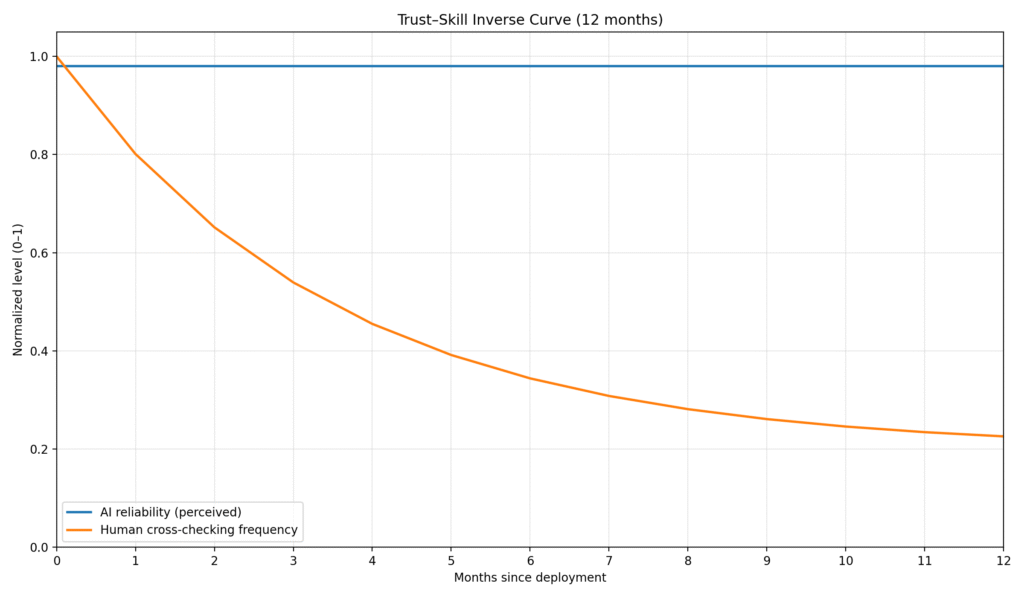

Human-factors research has names for this: automation complacency and automation bias. When a system appears reliable, attention drifts and monitoring drops.

https://pubmed.ncbi.nlm.nih.gov/21077562

It gets worse when failures are rare. When automation is “almost always right,” checking feels wasteful, and teams start skipping it. That effect is discussed directly in work on misuse of automated decision aids (including complacency and automation bias).

https://commons.wmu.se/lib_articles/18

Here’s the uncomfortable truth I keep seeing: most enterprise AI rollouts train employees on features, not failure. Teams learn which buttons to click, not when the system is most likely to be wrong.

Stanford HAI has also highlighted why “just add explanations” often doesn’t solve overreliance. The real driver is how the workflow prices attention: if engagement costs time and time costs status, people disengage.

https://hai.stanford.edu/news/ai-overreliance-problem-are-explanations-solution

That matches what I’ve seen in audit rooms. If skepticism is treated as friction, your organization will eventually engineer it out.

“Algorithmic cover” is the hidden incentive nobody wants to admit

A big driver of AI fatigue is accountability diffusion.

If you challenge the model and you’re wrong, you wasted time and look difficult.

If you follow the model and it’s wrong, you have cover.

That cover is organizational currency. It turns personal risk into systemic risk. Nobody wants to be the outlier who ignored the dashboard. So people comply. Quietly. Repeatedly. Until “critical thinking” becomes something leaders praise in speeches and penalize in performance reviews.

This is where AI-mediated decision-making starts bending professional identity. You don’t become an investigator. You become an interface manager.

For more on judgment under uncertainty, and the psychological trap of treating AI advice as “safer” than thinking, see: AI Advice vs Human Judgment

https://theaichronicle.org/ai-advice-vs-human-judgment/

Interface design is trust engineering (whether designers admit it or not)

We need to stop pretending UI/UX is neutral. Many 2026 enterprise AI interfaces are designed to broadcast certainty:

green checkmarks

single confidence numbers

authoritative language (“recommended action”)

default-to-approve flows

What they often don’t show clearly:

data freshness

uncertainty ranges

drift flags

outlier clusters

“what would change the answer” sensitivity cues

That’s not a minor design decision. It’s behavioral conditioning. Interfaces train people. If the UI trains certainty, people behave with certainty — even when certainty is not warranted.
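To make the contrast concrete, here is a minimal sketch of what a recommendation payload could carry if the interface were built to expose doubt alongside the headline number. Everything in it is an assumption for illustration: the `Recommendation` fields, the `caveats` helper, and the six-hour and 0.15 thresholds are hypothetical, not taken from any real product or from the audit described above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Recommendation:
    """Hypothetical payload; field names are illustrative only."""
    action: str
    confidence: float               # point estimate, 0.0 to 1.0
    interval: tuple[float, float]   # uncertainty range around the estimate
    data_as_of: datetime            # timestamp of the newest input the model saw
    drift_flag: bool                # True if monitoring detected input drift


def caveats(rec: Recommendation, now: datetime,
            max_age: timedelta = timedelta(hours=6),
            max_width: float = 0.15) -> list[str]:
    """Return the warnings a UI could show next to the headline number."""
    notes = []
    age = now - rec.data_as_of
    if age > max_age:
        notes.append(f"inputs are {age.total_seconds() / 3600:.0f}h old")
    low, high = rec.interval
    if high - low > max_width:
        notes.append(f"wide uncertainty range: {low:.0%} to {high:.0%}")
    if rec.drift_flag:
        notes.append("input drift detected")
    return notes


# Made-up example: a 94% headline number sitting on stale, drifting inputs.
now = datetime(2026, 1, 10, 12, 0, tzinfo=timezone.utc)
rec = Recommendation("route via corridor A", 0.94, (0.70, 0.97),
                     now - timedelta(hours=30), drift_flag=True)
for note in caveats(rec, now):
    print("WARNING:", note)
```

The design choice is that the single confidence number never travels alone. If the caveats list is non-empty, the interface has something to show before the green checkmark.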

Policy frameworks are trying to catch up. NIST explicitly frames AI risk as a lifecycle governance problem: measurement, monitoring, transparency, and accountability aren’t “nice to have,” they’re core.

https://www.nist.gov/itl/ai-risk-management-framework

https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

In Europe, the direction is even clearer: the EU AI Act establishes obligations tied to risk tiers — which, in practice, pushes organizations toward documentation and oversight for high-risk deployments.

https://eur-lex.europa.eu/eli/reg/2024/1689/oj/eng

In practice, product teams are still rewarded for frictionless adoption. Governance teams are rewarded for “not blocking progress.” That dynamic produces glossy certainty interfaces and weak skepticism muscle. Regulation may force better behavior over time, but in 2026, many firms are still betting they can move faster than accountability.

Contrarian take: hallucinations aren’t the main danger

The media obsession with hallucinations is understandable. It’s visible. It creates screenshots. It feels like the “problem.”

But hallucinations are not the deepest threat to professional competence.

A hallucinating system can actually keep humans sharp, because it forces verification. People double-check. They argue. They stay engaged.

The real threat is the near-perfect system.

If a system is right 99.9% of the time, human judgment atrophies. Slowly. Then suddenly. The team can still “operate,” but only through the interface. When the model fails under novel conditions — a regime shift, an edge case, a new adversary tactic, a changed supply chain reality — the organization discovers it no longer knows how to think without the machine.

That’s cognitive bankruptcy.
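A rough back-of-the-envelope calculation shows why near-perfect systems erode checking. The sketch below takes the 99.9% figure from above and assumes, purely for illustration, that each reviewed output is an independent trial; the review counts are hypothetical.

```python
def p_at_least_one_error(accuracy: float, reviews: int) -> float:
    """Chance of meeting at least one wrong output in `reviews` checks.

    Assumes errors are independent between checks, which real systems
    (with correlated failures under drift) will often violate.
    """
    return 1.0 - accuracy ** reviews


for n in (10, 100, 1000):
    print(f"{n} reviews: {p_at_least_one_error(0.999, n):.1%}")
```

Under those assumptions, a reviewer who checks 100 outputs has a bit under a 10% chance of catching any error at all. Most verification effort comes back empty-handed, which is exactly the experience that teaches a team to stop checking.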

This also connects to the broader theme of risk hiding in convenience: The Hidden Risks of Relying on AI

https://theaichronicle.org/the-hidden-risks-of-relying-on-ai/

Comparison table: the old workflow vs the AI-mediated workflow

What good looks like in 2026: skepticism by design, not by slogan

If you want to fight AI fatigue, don’t start with training slides about “critical thinking.”

Start with workflow economics.

Here are interventions I’ve seen actually work in real deployments:

Evidence gating: Before “Approve,” require users to select the supporting evidence points the model relied on (not just read a summary). If they can’t, they shouldn’t be approving.

Rotating challenger role: Assign one person per meeting to argue against the model’s recommendation. Normalize dissent.

Measure verification: Track cross-checks the way you track throughput. If skepticism isn’t measured, it will be sacrificed.

Rare-failure drills: Regularly simulate realistic model failures (stale data, drift, missing context). Build reflexes before incidents force them.

Notice what’s missing: “More explanations.” Explanations help sometimes, but only when the organization rewards engagement instead of punishing it.

The fastest teams in 2026 aren’t the ones that blindly accept outputs. They’re the ones that built repeatable skepticism into the workflow so verification doesn’t depend on heroics. That’s what “governance” should mean in practice: not a policy PDF, but a set of friction points placed where the organization is most likely to stop thinking.
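Two of the interventions above, evidence gating and measuring verification, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a description of any deployment I audited: `approve`, `ReviewLog`, `EvidenceGateError`, and the `min_evidence` threshold are all hypothetical names and values.

```python
from dataclasses import dataclass


class EvidenceGateError(Exception):
    """Raised when an approval lacks enough reviewer-selected evidence."""


@dataclass
class ReviewLog:
    """Hypothetical log that counts approvals and independent cross-checks."""
    decisions: int = 0
    cross_checked: int = 0

    def verification_rate(self) -> float:
        # Share of approvals where the reviewer also checked a source
        # outside the model's own summary.
        return self.cross_checked / self.decisions if self.decisions else 0.0


def approve(model_evidence: set[str], selected: set[str], log: ReviewLog,
            cross_checked: bool, min_evidence: int = 2) -> bool:
    """Approve only if the reviewer picked enough evidence the model cited."""
    valid = selected & model_evidence  # ignore picks the model never relied on
    if len(valid) < min_evidence:
        raise EvidenceGateError(
            f"need {min_evidence} cited evidence points, got {len(valid)}")
    log.decisions += 1
    log.cross_checked += int(cross_checked)
    return True


# Made-up example values.
log = ReviewLog()
evidence = {"port_status", "carrier_eta", "strike_notice"}
approve(evidence, {"port_status", "carrier_eta"}, log, cross_checked=True)
approve(evidence, {"port_status", "strike_notice"}, log, cross_checked=False)
print(f"verification rate: {log.verification_rate():.0%}")
```

The point of the gate is that a reviewer who cannot name what the model relied on cannot click through. The point of the log is that the verification rate can sit on the same dashboard as throughput.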

Analyst’s verdict: what I’d bet on for 2027–2028

By 2027, the “honeymoon phase” of AI efficiency will end for at least one critical industry segment — I’d watch insurance claims and mid-market banking first. Not because of a single catastrophic failure. Because of accumulated drift: small errors, small incentives, small changes in data, and a workforce trained to trust the summary instead of the substrate.

By 2028, “human-in-the-loop” will stop being branding and start being compliance. We’ll see mandatory skepticism protocols: documented challenge steps, uncertainty displays, and audit trails designed for regulators and litigators, not just internal QA. The direction of travel is already visible in NIST’s lifecycle framing and the legal obligations formalized by the EU AI Act.

https://www.nist.gov/itl/ai-risk-management-framework

https://eur-lex.europa.eu/eli/reg/2024/1689/oj/eng

The most valuable professionals in 2028 won’t be the ones who can prompt best.

They’ll be the ones who kept the ability to say, calmly and correctly:

“This output doesn’t make sense. Show me the inputs.”